I’ve run a couple of experiments with Tetra. Right now the code that handles disconnection of live sources (say, someone pulls the cable and walks away with one of our cameras) kind of works. It certainly does on my system, but with different sets of libraries the main GStreamer pipeline sometimes just hangs, and it really bothers me that I can’t get it right.

So I decided to really split it into a core that does the mixing (either manual or automatic) and separate pipelines that feed it. Previously I had success using the inter elements (with interaudiosrc hacked so its latency is acceptable) to mix video from a file, played by another pipeline, with live content.

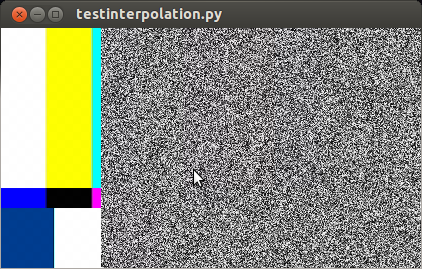
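A rough sketch of that split, written as gst-launch-style description strings (the kind you can pass to `gst-launch-1.0` or `Gst.parse_launch`). Each camera gets its own feeder pipeline, and the only thing tying it to the core is the inter element `channel` name. The helper names, the `-video`/`-audio` channel suffixes and the choice of `videomixer`/`audiomixer` and auto sinks are assumptions for illustration, not what Tetra actually does.

```python
# Sketch only: builds gst-launch-style description strings for one feeder
# pipeline per camera and a single core mixing pipeline. The only link
# between them is the inter element channel name. Helper names, channel
# suffixes, mixers and sinks are hypothetical choices.


def feeder_pipeline(video_src: str, audio_src: str, name: str) -> str:
    """Feeder: pushes one camera's video and audio into named inter channels."""
    return (
        f"{video_src} ! queue ! videoconvert ! "
        f"intervideosink channel={name}-video "
        f"{audio_src} ! queue ! audioconvert ! "
        f"interaudiosink channel={name}-audio"
    )


def core_pipeline(names: list[str]) -> str:
    """Core: pulls every feeder's channels and mixes them."""
    parts = [
        "videomixer name=vmix ! videoconvert ! autovideosink",
        "audiomixer name=amix ! audioconvert ! autoaudiosink",
    ]
    for name in names:
        # Each inter source reads from the channel its feeder writes to
        parts.append(f"intervideosrc channel={name}-video ! queue ! vmix.")
        parts.append(f"interaudiosrc channel={name}-audio ! queue ! amix.")
    return " ".join(parts)


if __name__ == "__main__":
    print(feeder_pipeline("v4l2src device=/dev/video0", "alsasrc device=hw:1", "cam1"))
    print(core_pipeline(["cam1", "cam2"]))
```

Because the feeder and the core only share a channel name, a feeder can be torn down and rebuilt from its own string without touching the core pipeline's state.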

Using the inter elements and a dedicated pipeline for each camera worked fine: the camera pipeline could die or disappear and the mixing pipeline churned along happily. The only downside is that it puts some requirements on the audio and video formats.
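One way to live with those format requirements is to convert every feeder to a single canonical format right before its inter sink, so the core always sees the same caps no matter which camera or file is feeding it. The concrete caps below (720p I420 at 30 fps, 48 kHz stereo S16LE) and the helper names are assumptions; pick whatever your mixer expects.

```python
# Sketch only: every feeder ends in a conversion "tail" that forces one
# canonical format before handing data to the core via an inter sink.
# The concrete caps and helper names are hypothetical.

VIDEO_CAPS = "video/x-raw,format=I420,width=1280,height=720,framerate=30/1"
AUDIO_CAPS = "audio/x-raw,format=S16LE,rate=48000,channels=2"


def video_tail(channel: str) -> str:
    """Convert colorspace, size and frame rate, then hand off to the core."""
    return (
        f"videoconvert ! videoscale ! videorate ! {VIDEO_CAPS} ! "
        f"intervideosink channel={channel}"
    )


def audio_tail(channel: str) -> str:
    """Convert sample format and rate, then hand off to the core."""
    return (
        f"audioconvert ! audioresample ! {AUDIO_CAPS} ! "
        f"interaudiosink channel={channel}"
    )


if __name__ == "__main__":
    # A camera feeder: each source is normalized before its inter sink
    print(f"v4l2src device=/dev/video0 ! {video_tail('cam1-video')} "
          f"alsasrc device=hw:1 ! {audio_tail('cam1-audio')}")
```

Doing the conversion in the feeder keeps that CPU cost in the camera's own pipeline rather than in the core.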

Something I wasn’t expecting was that CPU utilization went down. Before, I had two threads using 100% and 30% of CPU time (and many others below 10%), with both cores averaging 80% load. With separate pipelines linked by inter elements, the busiest thread sat at 55% with a couple of others near 10%, and both cores stayed a tad below 70%.

Using shmsrc/shmsink gave similar performance, but on the downside it behaved just like the original setup when sources were disconnected, so for now I’m not considering it for ingesting video. On the other hand, latency was imperceptible, as expected.
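For comparison, a sketch of the same camera hand-off over shared memory. One detail worth knowing: shmsrc does not carry caps, so the consumer has to restate the raw format with a caps filter that matches the producer. The socket path, caps and buffer sizing below are assumptions, and this sketch does nothing to fix the disconnection behaviour described above.

```python
# Sketch only: camera-to-mixer hand-off over shared memory instead of
# inter elements. Socket path, caps and shm size are hypothetical.

VIDEO_CAPS = "video/x-raw,format=I420,width=1280,height=720,framerate=30/1"
SOCKET_PATH = "/tmp/tetra-cam1"  # hypothetical control socket path

# One 1280x720 I420 frame is 1280 * 720 * 3 / 2 bytes; leave room for several.
FRAME_BYTES = 1280 * 720 * 3 // 2
SHM_SIZE = FRAME_BYTES * 8


def shm_producer(video_src: str) -> str:
    """Camera process: writes raw frames into the shared memory area."""
    return (
        f"{video_src} ! videoconvert ! {VIDEO_CAPS} ! "
        f"shmsink socket-path={SOCKET_PATH} shm-size={SHM_SIZE} "
        f"wait-for-connection=false"
    )


def shm_consumer() -> str:
    """Mixer process: shmsrc carries no caps, so they are restated here."""
    return (
        f"shmsrc socket-path={SOCKET_PATH} is-live=true ! {VIDEO_CAPS} ! "
        f"queue ! videoconvert ! autovideosink"
    )


if __name__ == "__main__":
    print(shm_producer("v4l2src device=/dev/video0"))
    print(shm_consumer())
```

The two strings run as separate processes, which is why latency stays low: frames are written once into shared memory instead of being copied between pipelines.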