This is more or less a direct translation of the examples found at gstreamer/tests/examples/controller/*.c to their equivalents using the gi bindings for GStreamer in Python. The documentation can be found here. Reading the source also helps a lot.

The basic premise is that you can attach a controller to almost any property of an object, set an interpolation mode, and give it (time, value) pairs so that the property changes smoothly between them. I’m using a pad as the target instead of an element just because it fits my immediate needs, but the target can be any GstObject, such as an Element.
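To make the idea of (time, value) pairs concrete, here is a small pure-Python sketch of what linear interpolation between control points amounts to. It is only an illustration and not GStreamer's implementation; the function name `linear_value` is made up, and holding the nearest value outside the defined range is an assumption of this sketch.

```python
from bisect import bisect_right

SECOND = 1_000_000_000  # nanoseconds, the same value as Gst.SECOND


def linear_value(points, t):
    """Return the linearly interpolated value at time t (in ns).

    points is a list of (timestamp_ns, value) pairs sorted by timestamp.
    Outside the covered range this sketch simply holds the nearest value.
    """
    times = [ts for ts, _ in points]
    i = bisect_right(times, t)
    if i == 0:
        return points[0][1]
    if i == len(points):
        return points[-1][1]
    (t0, v0), (t1, v1) = points[i - 1], points[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Two control points: value 1.0 at 0 s, value 0.5 at 4 s.
points = [(0 * SECOND, 1.0), (4 * SECOND, 0.5)]
halfway = linear_value(points, 2 * SECOND)  # value halfway through the ramp
```

With a real control source you never compute this yourself; you only hand over the points and the interpolation mode.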

First you need to import GStreamer and initialize it:

#!/usr/bin/python

import gi

import sys

from gi.repository import GObject

gi.require_version('Gst', '1.0')

gi.require_version('GstController', '1.0')

from gi.repository import Gst

from gi.repository import GstController

from gi.repository import Gtk

from gi.repository import GLib

GObject.threads_init()  # only needed on old PyGObject releases; not required since 3.11

Gst.init(sys.argv)

Then create your elements. Using Gst.parse_launch is by no means the best way, but it lets me cut down on the boilerplate.

p = Gst.parse_launch ("""videomixer name=mix ! videoconvert ! xvimagesink

videotestsrc pattern="snow" ! videoconvert ! mix.sink_0

videotestsrc ! videoconvert ! mix.sink_1

""")

m = p.get_by_name ("mix")

s0 = [pad for pad in m.pads if pad.name == 'sink_0'][0]

s0.set_property ("xpos", 100)

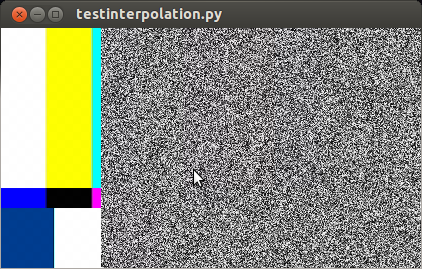

Here I created two test sources, one with bars and another with static that also has an horizontal offset. If we were to start the pipeline right now ( p.set_state (Gst.State.PLAYING) ) we would see something like this:

So far it works. Now I’d like to animate the alpha property of s0 (the sink pads of a videomixer have interesting properties like alpha, zorder, xpos and ypos). First we create a control source and set the interpolation mode:

cs = GstController.InterpolationControlSource()

cs.set_property('mode', GstController.InterpolationMode.LINEAR)

Then we create a control binding for the property we want to animate and add it to the target object (the pad, in this case):

cb = GstController.DirectControlBinding.new(s0, 'alpha', cs)

s0.add_control_binding(cb)

It is worth noting that the same control source can be used with more than one control binding.

Now we just need to add a couple of points and play:

cs.set(0*Gst.SECOND, 1)

cs.set(4*Gst.SECOND, 0.5)

p.set_state (Gst.State.PLAYING)
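One detail worth spelling out: a DirectControlBinding treats the values given to the control source as normalized, so 0 to 1 is mapped onto the property's minimum to maximum. That is why 1 and 0.5 work directly for alpha, whose range is already 0.0 to 1.0. The sketch below only illustrates that mapping in plain Python; the helper name `normalized_to_property` and the example range are hypothetical, and the clamping is how I understand the behaviour rather than something taken from the examples.

```python
def normalized_to_property(value, prop_min, prop_max):
    """Map a normalized control value (0..1) onto a property range.

    Values outside 0..1 are clamped first (an assumption of this sketch).
    """
    clamped = min(max(value, 0.0), 1.0)
    return prop_min + clamped * (prop_max - prop_min)


# alpha has a 0.0..1.0 range, so the value passes through unchanged.
alpha = normalized_to_property(0.5, 0.0, 1.0)

# For a hypothetical property ranging from 0 to 200, 0.5 lands in the middle.
middle = normalized_to_property(0.5, 0, 200)
```

For properties with a huge range, such as xpos, it is probably more convenient to pass real values; GstController.DirectControlBinding.new_absolute(s0, 'xpos', cs) creates a binding that skips the normalization.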

If you are not running this from the interpreter, remember to add GObject.MainLoop().run() ; otherwise the script will exit instead of continuing to play. Here I’ve used absolute times. To animate in the middle of a playing state you need to get the current time and set the points relative to it; something like this covers most cases:

start = p.get_clock().get_time() # XXX: you better check for errors

end = start + endtime*Gst.SECOND
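A hedged variation on the same idea: the helper below takes "now" as a plain number of nanoseconds and adds a ramp relative to it. The name `schedule_ramp` and its parameters are made up for illustration. As far as I can tell, elements sync their controlled properties against stream time, so the pipeline position may be a better reference than the raw clock time; treat that as an assumption to verify against your own pipeline.

```python
SECOND = 1_000_000_000  # nanoseconds, the same value as Gst.SECOND


def schedule_ramp(control_source, now_ns, duration_s, start_value, end_value):
    """Add two control points: start_value at now, end_value duration_s later.

    control_source can be any object with a set(timestamp, value) method,
    for example a GstController.InterpolationControlSource.
    """
    start = now_ns
    end = now_ns + int(duration_s * SECOND)
    control_source.set(start, start_value)
    control_source.set(end, end_value)
    return start, end


# Possible use with the pipeline from this post (not executed here):
#   ok, position = p.query_position(Gst.Format.TIME)
#   if ok:
#       schedule_ramp(cs, position, 4, 1.0, 0.5)
```

Checking the boolean returned by query_position covers the "check for errors" part, since the query can fail while the pipeline is not yet prerolled.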

Avoiding too much bookkeeping

You can get the control binding and control source of an element with:

control_binding = element.get_control_binding('property')

if control_binding:

    control_source = control_binding.get_property('control_source')
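If you do this lookup in several places, it can be wrapped in a small helper. This is just a sketch; `get_control_source` is a name I made up, and it relies only on the two calls shown above.

```python
def get_control_source(obj, prop_name):
    """Return the control source bound to prop_name on obj, or None.

    obj is any object offering get_control_binding(), such as a Gst.Element
    or a Gst.Pad that had a control binding added earlier.
    """
    binding = obj.get_control_binding(prop_name)
    if binding is None:
        return None
    return binding.get_property('control-source')


# Possible use with the pad from this post (not executed here):
#   cs = get_control_source(s0, 'alpha')
#   if cs is not None:
#       cs.unset_all()  # drop the old points before adding new ones
```

That way there is no need to keep references to every control source around; the object itself remembers them.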